As Smart Grid continues to transform the entire power industry, some of the initial focus around Smart Grid and Demand Response (SG & DR) has been in the areas of deploying Smart Meters and reading these through Automated Meter Reading (AMR) and Advanced Metering Infrastructure (AMI). As Meter Data Management products and applications are being employed to collect the data for various Smart Grid initiatives such as advanced billing, real-time pricing and managing grid reliability, the enormous increase in data volume has prompted a broader look at not only how to access data, but how to subsequently manage, analyze and utilize the data. The missing link from accessible to actionable comes down to a lack of strategic IT infrastructure.

Tony Giroti, CEO

BRIDGE Energy Group, Inc.

Unfortunately, the power industry has traditionally been a laggard in adopting Information Technology (IT), either because of a lack of funding or the absence of business drivers mandating the development of a strategic IT architecture. As a result, the motivation for creating a strategic IT architecture, also known as an Enterprise Architecture (EA), has not been compelling to date.

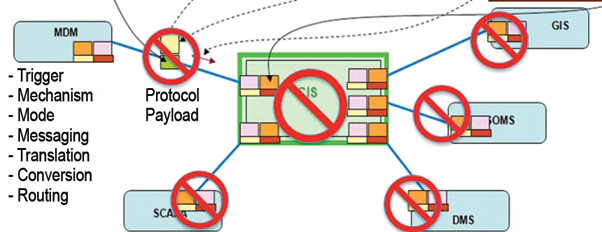

IT as an afterthought – especially around application integration – continues to be the norm for a majority of Smart Grid pilots across North America. Most utilities focused exclusively on deploying Smart Meters, communication infrastructure and Meter Data Management (MDM) products in their pilot phases have not included developing a strategic integration architecture that ties MDM data with other enterprise applications such as Outage Management System (OMS), Customer Information System (CIS), Geographical Information System (GIS), Distribution Management System (DMS), and Supervisory Control and Data Acquisition (SCADA).

This is primarily due to the fact that up until now, the industry has had minimal real-time integration demands. In fact, most integration needs have historically been met tactically through a one-off and project-based approach. IT has never had the motivation, the business drivers or the budget to develop a strategic architecture or develop a standardized approach to integration. Application and data integration requirements have been met through a tactical approach based upon any available technology or middleware offered by the application or system vendor.

As a result, quick point-to-point interfaces that are non-standard and custom-coded have evolved as the norm, simply to achieve short-term objectives. Compounding the issue, many of these interfaces are batch rather than real-time, with database links and proprietary code that is customized by writing more code within the application.

The Current State of Integration

How does P2P custom code become problematic as MDM products pave the way in many Smart Grid pilots? Take the example of the popular approach of connecting MDM with CIS in a point-to-point manner. That may work for low volume and low transaction pilots but will not scale to production quality volumes and bi-directional communication models as needed. Moreover, if the CIS is ever to be replaced, the MDM integration with CIS will require redesign and rework. This highlights the case that a point-to-point integration approach is not scalable, precludes future upgrades, and increases risk to the organization, as any change to the application would have a “ripple effect” on other downstream applications.

Example: Connecting MDM with CIS – everything must be hand coded.

If you replace or add an Application, the entire Ecosystem will be affected.

Moreover, the integration gap continues to widen over time as custom code is written for each P2P interface. The viral impact of the point-to-point architecture continues to reduce the overall integration capability, making each change riskier than the one prior. Data continues to be locked in silos and sharing becomes a significant challenge over time. This growth over time has resulted in what is referred to as “Accidental Architecture” where custom code is required to handle all aspects of communications between applications. Thus, the current tactical approach that served companies well in the past will not scale to support the larger vision of Smart Grid and Demand Response.
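The "ripple effect" can be made concrete with a small Python sketch. It assumes the worst case, in which each pair of the applications named earlier has its own custom interface, and compares that with a hypothetical shared integration layer (labeled `BUS` here) where each application keeps a single adapter. The function names are illustrative, not taken from any product.

```python
from itertools import combinations

# Applications named earlier in the article.
APPS = ["MDM", "CIS", "OMS", "GIS", "DMS", "SCADA"]


def point_to_point_links(apps):
    """Worst case: every pair of applications has its own custom interface."""
    return list(combinations(apps, 2))


def hub_links(apps):
    """Hypothetical shared integration layer: one adapter per application."""
    return [(app, "BUS") for app in apps]


def links_touching(links, app):
    """Interfaces that need rework if `app` is replaced."""
    return [pair for pair in links if app in pair]


p2p = point_to_point_links(APPS)
hub = hub_links(APPS)

print(len(p2p), len(links_touching(p2p, "CIS")))  # 15 interfaces, 5 touch CIS
print(len(hub), len(links_touching(hub, "CIS")))  # 6 adapters, 1 touches CIS
```

Under these assumptions, replacing the CIS means reworking five custom interfaces in the point-to-point case, against one adapter when a shared layer is in place.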

The back office has not been a major area of focus, which has created a gap in strategic IT architecture.

Smart Grid Architecture, the P2P Antidote

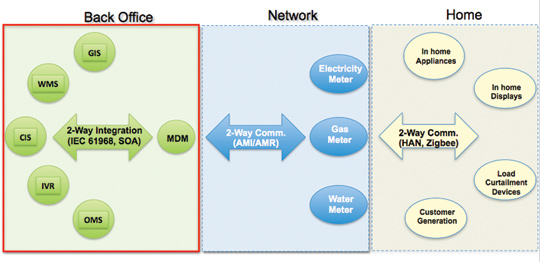

As an alternative to P2P architecture, there is a more strategic “Smart Grid Architecture” that specifically addresses integration and interoperability challenges. Smart Grid and Demand Response initiatives will require real-time integration of applications and systems to enable real-time communication and timely sharing of data to make informed decisions. The tools and infrastructure that are required to realize the Smart Grid architecture and vision will vary from project to project and may include: SOA toolset, development tools, configuration management tools, a source control tool, infrastructure for development, testing and production, etc.

Real-time Enterprise Architecture

One of the key aspects of the Smart Grid Architecture is to provision real-time decision making, which is possible only if data can be harnessed as it is generated (without much latency) and is applied towards a specific objective that requires data as it happens. Such capabilities are possible only with a Real-Time Enterprise Integration Architecture (RTEIA), where immediacy of data is critical and data flows seamlessly between applications and systems (with appropriate governance and security controls).

This real-time or “active” data has significantly more value than static, older data because it can be harnessed to make just-in-time decisions. Examples include automated outage detection through last-gasp meter data for proactive customer service and proactive self-healing of the grid; detection of current load and critical peak conditions to initiate automated load curtailment programs at participating C&I customer premises; and air conditioning load curtailment at participating retail households. Non-real-time integration requirements, met via batch data or a “passive” flow of data, can be leveraged appropriately for non-real-time decision-making. Both active and passive data have value and can be used strategically.

Event Driven Architecture

Another key aspect of the Smart Grid Architecture is its ability to manage hundreds, thousands, or even millions of transactions in such a way that events are generated, detected, and processed with pre-defined business logic and predictable conditions. An event can be considered as any notable condition that happens inside or outside your IT environment or your business. Usually, an event is detected as data and messages flow between applications. An event could be a business event, such as detection of an outage condition, or a system event, such as failure of the MDM application to collect meter data. An event may also signify a problem, an exception, a predictable error, an impending problem, an opportunity, a threshold, or a deviation from the norm.

Given the transaction volume generated by Smart Meters, Smart Grid Architecture would also require a Management by Exception (MBE) capability where any error related to the integration of data and messages between systems and applications is captured, a trend identified and eventually addressed within a meaningful timeframe. In this case, MBE alludes to the capability where an abnormal condition, such as an exception, or an error requires special attention without any significant overhead or management on the rest of the system.
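As a rough illustration of Management by Exception, the Python sketch below records only failures per interface and surfaces an interface once its error count reaches a threshold. The class name, the interface labels (`MDM->CIS`, `MDM->OMS`) and the threshold of 3 are all hypothetical choices made for the example.

```python
from collections import Counter
from typing import List


class ExceptionMonitor:
    """Counts integration errors per interface; successes add no bookkeeping."""

    def __init__(self, alert_threshold: int) -> None:
        self.alert_threshold = alert_threshold
        self.errors: Counter = Counter()
        self.processed = 0

    def record(self, interface: str, ok: bool) -> None:
        self.processed += 1
        if not ok:
            self.errors[interface] += 1

    def needs_attention(self) -> List[str]:
        """Interfaces whose error count has reached the threshold."""
        return sorted(
            name
            for name, count in self.errors.items()
            if count >= self.alert_threshold
        )


monitor = ExceptionMonitor(alert_threshold=3)
for i in range(1000):
    monitor.record("MDM->CIS", ok=(i % 400 != 0))  # fails at i = 0, 400, 800
for i in range(1000):
    monitor.record("MDM->OMS", ok=(i != 500))      # fails once

print(monitor.processed)          # 2000
print(monitor.needs_attention())  # ['MDM->CIS']
```

Only the interface showing a repeated error pattern is raised for attention; the single failure on the other interface is recorded but does not trigger follow-up.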

The Smart Grid Architecture should include an Event Driven Architecture (EDA) capability to process events as and when they occur with minimal human intervention.
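A minimal in-process sketch of the event-driven idea, written in Python, might look like the following. It is not a production Complex Event Processing engine: the `EventBus` class, the event names (`meter.last_gasp`, `meter.interval_read`) and the two handlers are assumptions made purely for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Event:
    kind: str                      # hypothetical event name, e.g. "meter.last_gasp"
    source: str                    # originating system, e.g. "MDM"
    payload: dict = field(default_factory=dict)


class EventBus:
    """Routes each published event to the handlers subscribed to its kind."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, kind: str, handler: Callable[[Event], None]) -> None:
        self._handlers[kind].append(handler)

    def publish(self, event: Event) -> int:
        """Invoke every subscribed handler; return how many were called."""
        handlers = self._handlers.get(event.kind, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


work_orders: List[str] = []
notifications: List[str] = []

bus = EventBus()
# Two independent consumers react to the same business event.
bus.subscribe("meter.last_gasp", lambda e: work_orders.append(e.payload["meter_id"]))
bus.subscribe(
    "meter.last_gasp",
    lambda e: notifications.append(f"Outage reported at meter {e.payload['meter_id']}"),
)

delivered = bus.publish(Event("meter.last_gasp", "MDM", {"meter_id": "M-1001"}))
ignored = bus.publish(Event("meter.interval_read", "MDM", {"meter_id": "M-1002"}))

print(delivered, ignored)  # 2 0
print(work_orders)         # ['M-1001']
```

The publisher does not know which applications consume the event, so a new consumer can be added with another `subscribe` call rather than another custom interface.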

The Future of Smart Grid Architecture

It is at this vital crossroad that the power industry has two choices:

- To be proactive and have a strategy for managing Grid Operations & IT transformation through a strategic Smart Grid integration architecture; or,

- To be reactive and tactical in responding to problems as they appear.

The latter approach is risky and will prove to be a major impediment to Smart Grid success. However, for those willing to take the proactive road, success or failure could rely squarely on the approach to solving the core IT challenges. For Meter Data Management, there are the inherent challenges of data volume, transaction performance, event handling and database performance as outlined below.

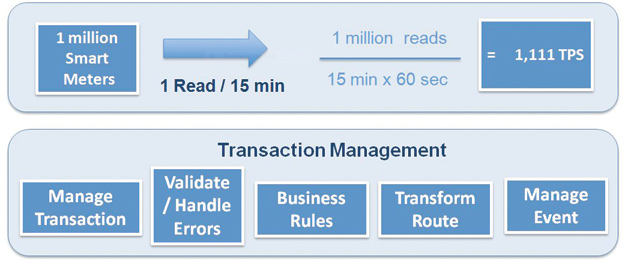

The Data Volume Challenge

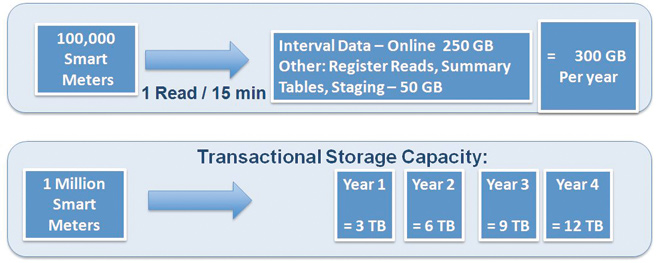

As Meter Data Management products and applications are being employed to collect the data for various Smart Grid initiatives, these programs call for collecting huge volumes of meter data on a 15-minute interval basis. For a million meters, this data amounts to roughly 1,111 TPS (transactions per second).

Transaction Volume = 1,000,000 / (15 x 60) = 1,111 tps

Challenge: High Transaction Volume & Distributed Transactions

If each transaction is 1,000 bytes (1 KB), then 1 KB x 1,111 transactions = 1,111 KB are required per second. This is roughly 1 MB of data gathering and storage per second. Data Collected Per Hour = 1 MB x 60 x 60 = 3.6 GB. This is equal to roughly 86 GB per day; 2.6 TB per month; and about 31 TB per year.
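The arithmetic in this section can be reproduced with a short Python sketch. It assumes decimal units (1 KB = 1,000 bytes) and follows the article in rounding the data rate to 1 MB per second before scaling up. The 30-day month and 365-day year are also assumptions, and the constant names are illustrative.

```python
METERS = 1_000_000
INTERVAL_SECONDS = 15 * 60          # one read per meter every 15 minutes
BYTES_PER_TRANSACTION = 1_000       # 1 KB per read, as assumed in the text

transactions_per_second = METERS / INTERVAL_SECONDS
raw_bytes_per_second = transactions_per_second * BYTES_PER_TRANSACTION

# The article rounds roughly 1.11 MB/s to 1 MB/s before scaling up.
ROUNDED_BYTES_PER_SECOND = 1_000_000

bytes_per_hour = ROUNDED_BYTES_PER_SECOND * 60 * 60
bytes_per_day = bytes_per_hour * 24
bytes_per_month = bytes_per_day * 30    # assumes a 30-day month
bytes_per_year = bytes_per_day * 365

GB = 10**9
TB = 10**12

print(f"{transactions_per_second:,.0f} tps")
print(f"{raw_bytes_per_second / 10**6:.2f} MB/s before rounding")
print(f"{bytes_per_hour / GB:.1f} GB per hour")
print(f"{bytes_per_day / GB:.1f} GB per day")
print(f"{bytes_per_month / TB:.2f} TB per month")
print(f"{bytes_per_year / TB:.1f} TB per year")
```

Without the rounding step the raw rate is about 1.11 MB per second, so every downstream figure would be roughly 11% higher.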

Challenge: Data Volume & Performance

Transactional data collected from customer meters can quickly reach staggering proportions that will require significant storage capacity. It will also require an information life cycle management approach, in which data is managed on the premise that its value, or at least the value of its finest level of granularity, gradually diminishes over time.

Transaction Performance Challenge

Transaction performance is critical to the success of any system. Many SG & DR projects are hitting performance bottlenecks due to architectural constraints. Energy companies might consider the TPC-APP Benchmark™ as a way to measure their application performance. TPC-C, a transaction processing benchmark, can be used for the performance-related planning that might be required to manage the transaction load and throughput.

Consider an AMI/AMR project that requires collection of data from a million smart-meters at 15-minute intervals. Per the previous section, the transaction volume is equal to 1,111 tps. This is equal to over 90 million transactions per day. The sheer handling of such transaction load may be a significant challenge and will require careful planning and selection of the appropriate communication technologies and MDM vendors. In addition to collecting the data, an organization will need to manage performance and storage challenges.

Event Handling Performance Challenge

A Complex Event Processing infrastructure is required to detect system and business events. This infrastructure will need to detect events “just-in-time”. With over 90 million records arriving each day, the detection of a “needle in a haystack” must work day after day, month after month, with little to no room for error.

If any of the transactions is a “last gasp”, then such events will require tracking and action. One could assume a 0.01% chance, or 1 in every 10,000 meters, of a meter sending a last-gasp message on any given day. For a million meters, there may be about 100 last-gasp messages per day that require a business action such as automated self-healing or work order creation and crew dispatch. Either way, such a transaction needs to be processed when it occurs.
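The estimate above, and the idea of picking last-gasp messages out of the normal read traffic, can be sketched in Python as follows. The message layout (a dict with a `type` field) and the function names are hypothetical; real meter messages would follow the format defined by the AMI or MDM vendor.

```python
from typing import Iterable, Iterator


def expected_last_gasps_per_day(meters: int, one_in: int) -> int:
    """Expected daily last-gasp count if 1 in `one_in` meters sends one."""
    return meters // one_in


def last_gasp_events(messages: Iterable[dict]) -> Iterator[dict]:
    """Yield only last-gasp messages, in arrival order, as they stream past."""
    for message in messages:
        if message.get("type") == "last_gasp":
            yield message


# A tiny hypothetical stream: mostly interval reads, two last gasps.
stream = [
    {"meter_id": "M-0001", "type": "interval", "kwh": 0.41},
    {"meter_id": "M-0002", "type": "last_gasp"},
    {"meter_id": "M-0003", "type": "interval", "kwh": 0.52},
    {"meter_id": "M-0004", "type": "last_gasp"},
]

expected = expected_last_gasps_per_day(meters=1_000_000, one_in=10_000)
flagged = [m["meter_id"] for m in last_gasp_events(stream)]

print(expected)  # 100
print(flagged)   # ['M-0002', 'M-0004']
```

Because the filter is a generator, each last-gasp message can be handed to a business action as soon as it is seen rather than after a batch completes.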

Database Performance Challenge

A large volume of transactions will also need to be written into the database. At a rate of roughly 1,000 transactions per second, about 90 million transactions may be written in a day. In some instances, to narrow down an outage, the last-gasp meter data may need to be accessed from the ever-growing transactional database (as shown in the Data Volume section), resulting in significant performance bottlenecks in database I/O. In this example, if there are 30 days' worth of data in the database, then the database records that will have to be searched = 90 million x 30 days = 2.7 billion records. This may result in serious database performance issues. Optimizing the database indexes and parallelizing the databases will be a prerequisite.
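To illustrate the indexing point, here is a small Python sketch using the standard-library `sqlite3` module with an in-memory database. The table name, column names and sample rows are hypothetical, and a production MDM store would be a very different system; the sketch only shows how a composite index on event type and read time lets a last-gasp lookup avoid scanning every interval read.

```python
import sqlite3

RECORDS_PER_DAY = 90_000_000
RETENTION_DAYS = 30
records_to_search = RECORDS_PER_DAY * RETENTION_DAYS  # 2.7 billion without an index

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE meter_reads ("
    " meter_id TEXT NOT NULL,"
    " read_time TEXT NOT NULL,"   # ISO 8601 text sorts chronologically
    " event_type TEXT NOT NULL,"
    " kwh REAL)"
)
conn.executemany(
    "INSERT INTO meter_reads VALUES (?, ?, ?, ?)",
    [
        ("M-0001", "2024-01-01T00:00:00", "interval", 0.41),
        ("M-0002", "2024-01-01T00:00:00", "interval", 0.38),
        ("M-0002", "2024-01-01T00:07:12", "last_gasp", None),
        ("M-0003", "2024-01-01T00:15:00", "interval", 0.52),
        ("M-0003", "2024-01-02T03:30:45", "last_gasp", None),
    ],
)
# Composite index: equality on event_type, then a range on read_time.
conn.execute(
    "CREATE INDEX idx_reads_event_time ON meter_reads (event_type, read_time)"
)

QUERY = (
    "SELECT meter_id, read_time FROM meter_reads"
    " WHERE event_type = 'last_gasp' AND read_time >= ?"
    " ORDER BY read_time"
)


def recent_last_gasps(connection, since):
    """Return (meter_id, read_time) for last gasps at or after `since`."""
    return connection.execute(QUERY, (since,)).fetchall()


recent = recent_last_gasps(conn, "2024-01-02T00:00:00")
print(recent)  # [('M-0003', '2024-01-02T03:30:45')]

# Inspect how SQLite plans to execute the query.
for row in conn.execute("EXPLAIN QUERY PLAN " + QUERY, ("2024-01-02T00:00:00",)):
    print(row)
```

The `EXPLAIN QUERY PLAN` output is a convenient way to check whether the index is actually being used before relying on it at scale.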

Leveraging the Data – We’re Going to Need a Bigger Boat

There are many other IT challenges that must be addressed as organizations launch SG & DR programs. However, one final challenge for the purpose of this article is the issue of reporting and leveraging data warehouses. To date, corporate or enterprise data warehouses have not been a norm in the power industry. Reporting needs have been met traditionally through the use of operational reports taken directly from the transactional systems. Going forward, the status quo is not the recommended approach, because when real SG & DR programs are launched, IT will have a transactional database requiring high throughput and large data volumes, as previously illustrated. In addition, reporting off of transactional systems may reduce application performance and impact other critical systems.

Additionally, once organizations are able to mine volumes of usage, outage data, peak load and other market and operational data that will be collected from Smart Meters and other applications, this information will need to be sourced and consolidated from disparate systems such as meter readings from MDM, operational data from SCADA, customer data from CIS and outage data from OMS. With so much actionable intelligence at stake, transactional systems should not be used to perform reporting. Instead an enterprise data warehouse should be developed that leverages data from the transactional system to do historic reporting, trend analysis, ad-hoc reporting, “what-if” analytics, better planning, and forecasting. Such data can also be used to improve customer service, lower cost of operation, increase grid reliability, and improve market operations.

Successfully tackling these challenges will enable organizations to clearly execute on their vision of developing a Real-Time Integration Architecture that will serve as a foundation for all SG & DR programs.

Making Smart Grid a Reality

Given the critical role that IT systems will play in concert with MDM and AMI/AMR projects, many of the decisions for Smart Grid & Demand Response initiatives will originate from the programmable business rules, and SG & DR applications resident within the IT realm. Transactions such as triggers to connect/disconnect a customer’s Smart Meter could originate from the CIS application, perhaps based upon a change in customer status, or an outage pattern could be detected based upon consistent “last-gasp” reads from a localized set of meters.

The ambitious objectives of Smart Grid, when combined with some early warning signs from those who’ve embarked on the journey, indicate that the role and complexity of IT is being grossly under-estimated, and that IT is going to play a more prominent, if not dominant, role in making Smart Grid a reality. The Power industry needs to take a careful, hard look at these indicators, do appropriate course correction and reconcile with the role that IT will play in Smart Grid and Demand Response programs. IT will need to develop a Strategic “Smart Grid Architecture” as opposed to an “Accidental Architecture.”

The bottom line is that IT systems will be integral to increasing the reliability of the grid and empowering customers with new demand response programs as more smart meters are deployed across the nation. Organizations implementing SG & DR programs based on strategic vision, planning and an architectural approach will ultimately be the leaders in making Smart Grid a reality.

About the Author

Tony Giroti is the Chairman & CEO of BRIDGE Energy Group Inc. and has over 23 years of experience in managing information technology products, platforms and applications. He is a Board Member of OASIS and member of the Smart Grid Architecture Committee, U.S. NIST. Tony is the former Chairman of the IEEE; Past President of the IEEE Power Engineering Society; Chairman CISA/ISACA New England; and former President, CEO and Chairman of two venture capital-backed global technology companies. Mr. Giroti is a certified information systems auditor (CISA) and has been granted four patents in the areas of SOA/XML/IT platforms.

After completing his Bachelor of Science in Electrical Engineering, Mr. Giroti trained at Crompton Greaves Ltd. in the Power Systems division designing large transformers. He also holds a Master of Science in Electrical and Computer Engineering from the University of Massachusetts.